Written by: Igor

Published: December 2025

Gartner predicts that 85% of AI projects fail to deliver on their intended outcomes. The cause is rarely a lack of powerful algorithms. It is the gap between a promising proof-of-concept and a resilient production system. Too many AI initiatives stall in the lab because they cannot handle real-world data, security threats, and operational demands, and the potential ROI is lost. This article provides an actionable production readiness checklist to help your project land among the 15% that succeed.

Key takeaways

- De-risk your launch: This checklist covers 10 critical areas, from data quality to cost controls, that address common failure points.

- Move beyond the lab: Learn the steps to ensure your model is scalable, secure, and ready for real-world user traffic.

- Focus on business outcomes: Each checkpoint connects technical requirements to business value, like maintaining user trust and controlling costs.

- Create an actionable plan: Use the provided framework to score your system, identify gaps, and build a prioritized backlog.

1. Data quality and integrity validation

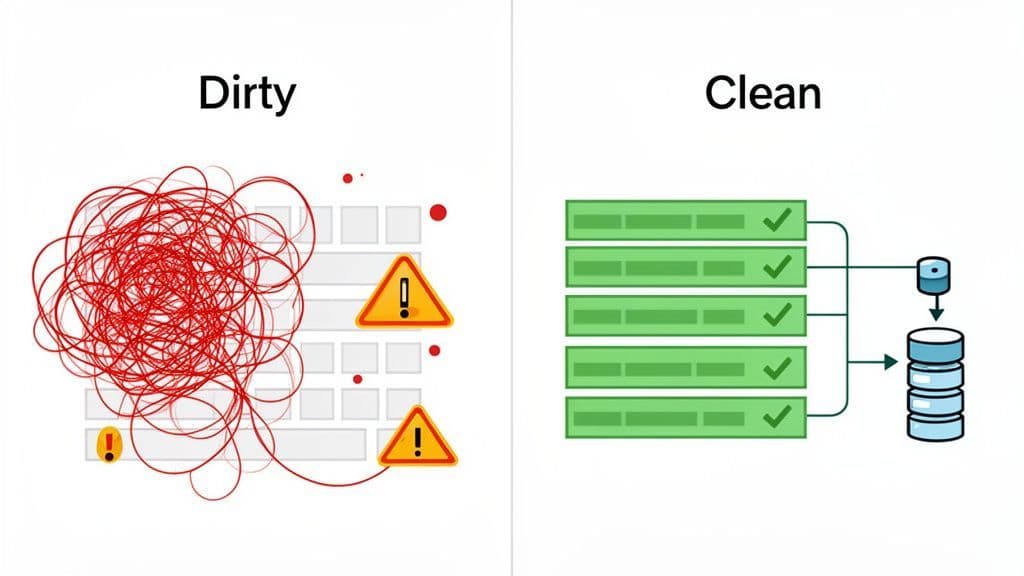

The most sophisticated AI model is worthless if it's fed poor-quality data. Data quality validation is the foundational step in any production readiness checklist, ensuring the data powering your system is clean, consistent, and reliable. This prevents unpredictable and erroneous model behavior in a live environment. For AI systems, the principle of "garbage in, garbage out" is magnified, making this step non-negotiable.

This validation must be automated and embedded in your data pipelines. It is a continuous process that checks data for missing values, outliers, bias, and distribution drift, where production data diverges from the training data and silently degrades model performance.

Actionable tips for implementation

- Automate in the pipeline: Use tools like Great Expectations or dbt tests to build automated validation checks directly into your ETL/ELT pipelines. This catches issues before they corrupt your production system.

- Tie metrics to business KPIs: Instead of just tracking null percentages, correlate data quality metrics to business outcomes. For example, track how "listing data completeness" impacts booking conversion rates.

- Monitor data distribution drift: Implement a system to continuously compare the statistical distribution of live production data against your training data. Set up alerts for significant deviations to trigger model retraining or investigation. To learn more about building these robust systems, you can explore the principles of effective data engineering services.
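One common way to put a number on distribution drift is the Population Stability Index (PSI). The sketch below is a minimal, standard-library-only illustration. It assumes a single numeric feature, equal-width bins derived from the training data, and the frequently quoted rule of thumb that a PSI above 0.2 indicates significant drift. The function names and the threshold are illustrative and do not come from any particular tool.

```python
import math
from typing import Sequence

DRIFT_THRESHOLD = 0.2  # common rule of thumb (assumption); tune per feature


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Fraction of values in each bin; out-of-range values go to the edge bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Walk right until v is below the next edge (or we reach the last bin).
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    eps = 1e-6  # avoids log(0) for empty bins
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(train: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """PSI between training and live data for one numeric feature (0 means identical bins)."""
    lo, hi = min(train), max(train)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature
    edges = [lo + i * width for i in range(bins + 1)]
    expected = bin_fractions(train, edges)
    actual = bin_fractions(live, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def check_drift(train: Sequence[float], live: Sequence[float]) -> tuple[float, bool]:
    """Return the PSI and whether it crosses the alert threshold."""
    psi = population_stability_index(train, live)
    return psi, psi > DRIFT_THRESHOLD
```

In a pipeline, you would typically run a check like this per feature on each batch of production data and route any `True` result to your alerting system.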

2. Model performance benchmarking and validation

An AI model that performs well in a lab can fail spectacularly in the real world. Model performance benchmarking is the rigorous process of testing a model against predefined business and technical criteria before it impacts a user. It ensures your model not only meets accuracy targets but also satisfies operational requirements like speed and throughput. This step acts as the final quality gate in your production readiness checklist.

This process involves validating performance on a curated, production-representative test set that covers expected scenarios, edge cases, and diverse user segments. By establishing clear, quantitative benchmarks, you create an objective standard that a model must meet to be considered "production-ready."

Actionable tips for implementation

- Define success with business stakeholders: First, ask which business outcome the model supports: increasing revenue, improving efficiency, or raising customer satisfaction. Then translate those goals into model metrics such as precision, recall, or latency.

- Establish performance "guardrails": Define non-negotiable thresholds the model cannot cross. For example, a fraud detection model must maintain a false positive rate below 0.1% to avoid blocking legitimate customers.

- Use stratified test sets: Ensure your test data accurately represents your user base, including minority classes and critical edge cases. A model that performs well on average can still fail spectacularly for important user segments.

- Monitor tail latency: Average response time can be misleading. Monitor the 99th percentile (p99) latency to understand the experience of your slowest-served users. A high p99 latency indicates a potential bottleneck.
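The guardrails above can be sketched as an automated release gate. This minimal example assumes binary fraud labels (1 = flagged), test results already grouped by user segment, and a hypothetical 200 ms p99 latency budget. It uses the nearest-rank percentile method and reuses the 0.1% false positive rate from the fraud example. Every name here is illustrative.

```python
import math
from dataclasses import dataclass


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def false_positive_rate(y_true: list[int], y_pred: list[int]) -> float:
    """FP / (FP + TN) for binary labels, where 1 means 'flagged as fraud'."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return fp / (fp + tn) if fp + tn else 0.0


@dataclass
class Guardrails:
    max_fpr: float = 0.001             # 0.1%, as in the fraud example above
    max_p99_latency_ms: float = 200.0  # hypothetical latency budget


def gate_violations(segments: dict[str, tuple[list[int], list[int]]],
                    latencies_ms: list[float], g: Guardrails) -> list[str]:
    """An empty list means the candidate model passes the release gate."""
    violations = []
    # Check each user segment separately so a good average cannot hide a bad slice.
    for name, (y_true, y_pred) in segments.items():
        fpr = false_positive_rate(y_true, y_pred)
        if fpr > g.max_fpr:
            violations.append(f"{name}: FPR {fpr:.4f} exceeds {g.max_fpr}")
    p99 = percentile(latencies_ms, 99)
    if p99 > g.max_p99_latency_ms:
        violations.append(f"p99 latency {p99:.0f} ms exceeds {g.max_p99_latency_ms:.0f} ms")
    return violations
```

Returning a list of violations, rather than a single boolean, gives the CI log a readable reason whenever a model is blocked.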

3. Security and access control verification

An AI system, no matter how powerful, is a massive liability if it's not secure. Security and access control verification is the critical process of hardening your system against unauthorized access, data breaches, and malicious manipulation. Neglecting this step in your production readiness checklist exposes your intellectual property and sensitive customer data, risking severe financial and reputational damage. According to a report by McKinsey, cybersecurity is a top concern for executives adopting AI.

This verification is a comprehensive framework that includes secrets management, role-based access control (RBAC), and network isolation. For AI systems, the threat is twofold: data theft and model manipulation. Security must be baked into the system's architecture from day one.

Actionable tips for implementation

- Use environment-specific secrets: Never share credentials across development, staging, and production. Use a secrets manager like HashiCorp Vault or AWS Secrets Manager to inject separate credentials for each environment.

- Implement API authentication and rate limiting: Secure every model inference endpoint with robust authentication so that only known clients can call it, and enforce strict per-client rate limits. Rate limiting is your first line of defense against abusive traffic and denial-of-service attacks.

- Enable detailed audit logging from day one: Log every access request, model prediction, and configuration change. These logs are invaluable for security forensics and compliance audits.

- Conduct a pre-deployment security review: Partner with your security team to perform a thorough review before going live. They can help identify vulnerabilities in network configurations, container security, and access policies.
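In practice, rate limiting usually lives in an API gateway. The token-bucket logic behind it is still worth seeing. The sketch below keeps one bucket per API key in memory. It assumes the key has already been authenticated upstream and that a single process serves the endpoint, so a multi-replica deployment would need a shared store instead. The clock is injectable so the logic can be tested. The class names are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per API key; assumes the key was already authenticated upstream."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, api_key: str) -> bool:
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.capacity, self.rate, self.clock)
        return self.buckets[api_key].allow()
```

A request for which `allow` returns `False` would typically be rejected with HTTP 429 (Too Many Requests) before it reaches the model.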

4. Infrastructure capacity and scalability assessment

An AI system that collapses under its first real-world traffic spike is a production failure. Infrastructure capacity and scalability assessment ensures your system can handle expected production loads without degrading performance. This involves testing compute resources, database capacity, and network bandwidth to prevent outages and slow response times. It's the engineering due diligence that separates a fragile prototype from a resilient, enterprise-grade application.

This process requires proactively modeling future growth, stress-testing system breakpoints, and configuring automated scaling mechanisms. For AI applications, where resource demands for inference can be intense and spiky, a robust scalability plan is a core component of a successful production readiness checklist.

Actionable tips for implementation

- Conduct realistic load tests: Use tools like k6 or Locust to script tests that mimic real, complex user journeys and access patterns to find hidden bottlenecks.

- Establish clear SLOs first: Before deploying, define and agree upon Service Level Objectives (SLOs) for key metrics like p99 latency and system availability. Your capacity plan should be designed to meet these targets.

- Test auto-scaling policies: Configure and test your auto-scaling rules in a staging environment. Ensure they trigger reliably to scale resources up during spikes and scale down efficiently to control costs.

- Plan for 2x peak capacity: A good rule of thumb is to provision for, or be able to scale to, at least double your expected peak load. This provides a buffer for unexpected traffic surges. Choosing the right hardware, such as dedicated AI/ML servers, can also be a key part of this strategy.
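The 2x rule turns into simple arithmetic once a load test tells you how much traffic one replica can sustain while still meeting its p99 SLO. The helper below is a minimal sketch with hypothetical inputs. The minimum replica count is an added assumption, included so that losing a single instance does not cause an outage.

```python
import math


def replicas_for_peak(peak_rps: float, per_replica_rps: float,
                      headroom: float = 2.0, min_replicas: int = 2) -> int:
    """Replicas needed to serve `headroom` x the expected peak load."""
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    needed = math.ceil(peak_rps * headroom / per_replica_rps)
    # Keep a floor so a single replica failure is not an outage.
    return max(min_replicas, needed)
```

For example, if a hypothetical load test shows one replica sustains 60 requests per second within the latency SLO and the expected peak is 450 requests per second, `replicas_for_peak(450, 60)` gives 15. That number can serve as the auto-scaler's maximum, or as the capacity you verify it can actually reach.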

5. Monitoring, observability, and alerting setup

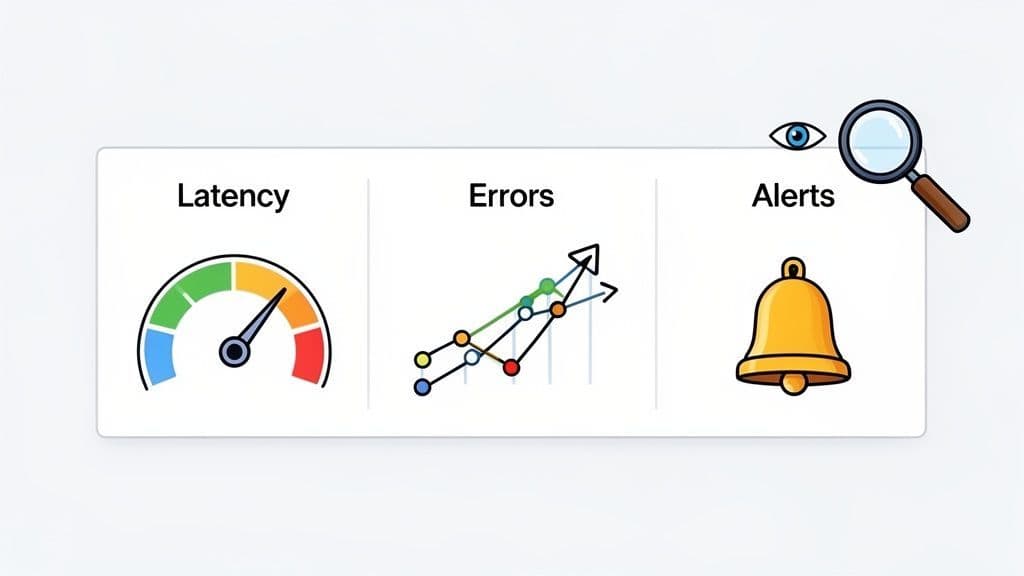

Once a system is live, you can't fly blind. Monitoring, observability, and alerting are the essential feedback mechanisms that tell you how your system is performing. This involves implementing comprehensive logging, metrics collection, and tracing to gain a real-time understanding of system health. Without this setup, you are waiting for customers to report problems. A robust monitoring strategy is a core component of any production readiness checklist.

This setup isn't just about infrastructure health. It is about observing the entire system, from hardware utilization to the specific predictions an AI model makes. This holistic view, often called AI observability, is crucial for maintaining reliability and trust in automated systems.

Actionable tips for implementation

- Define SLOs and track SLIs: Start by defining Service Level Objectives (SLOs) tied to user experience, such as "99.5% availability" or "p99 API latency < 200ms". Then, track the Service Level Indicators (SLIs) that measure them.

- Monitor model and business metrics: Go beyond infrastructure health. Track model-specific metrics like prediction latency, inference error rates, and input data drift. Correlate these with business KPIs.

- Create role-based dashboards: Build separate dashboards for different audiences. An engineering dashboard might show detailed system logs, while a leadership dashboard would focus on high-level SLOs and business impact.

- Set impact-driven alerts with runbooks: Configure alerts based on potential business impact to avoid alert fatigue. Every alert must link to a runbook with clear, step-by-step instructions for resolution.
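One way to make alerts impact-driven is to page on how quickly the SLO's error budget is being consumed rather than on every error. A 99.5% availability SLO leaves an error budget of 0.5% of requests. The burn rate is the observed error rate divided by that budget, so a burn rate of 1.0 spends the budget exactly on schedule. The sketch below assumes good and total request counts over a recent window. The paging threshold of 10 is a hypothetical value, not a standard.

```python
from dataclasses import dataclass


@dataclass
class SLO:
    target: float  # e.g. 0.995 for "99.5% availability"

    @property
    def error_budget(self) -> float:
        return 1.0 - self.target


def burn_rate(good: int, total: int, slo: SLO) -> float:
    """How fast the error budget is being consumed: 1.0 means exactly on budget."""
    if total == 0:
        return 0.0
    error_rate = 1.0 - good / total
    return error_rate / slo.error_budget


def should_page(good: int, total: int, slo: SLO, threshold: float = 10.0) -> bool:
    """Page only when the budget burns far faster than sustainable (threshold is illustrative)."""
    return burn_rate(good, total, slo) >= threshold
```

Lower burn rates can still open a ticket instead of paging someone, which helps keep alert fatigue down.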

6. Error handling, graceful degradation, and fallback mechanisms

Even the most robust AI models fail eventually. Error handling and graceful degradation are the safety nets in your production readiness checklist that keep your system resilient when its core AI components falter. This approach moves beyond simple pass/fail logic: the system maintains partial functionality, so a single point of failure cannot cause a total collapse.

This strategy involves planning for specific failure modes and creating predefined fallback mechanisms. Instead of showing a cryptic error message, the system defaults to a stable, predictable state, preserving the user experience and preventing cascading failures.

Actionable tips for implementation

- Define failure modes: Map out the potential failure points for your AI service (timeouts, invalid outputs, service unavailability) and define a specific fallback response for each one. This could mean serving a cached result or switching to a simpler rule-based model.

- Implement circuit breakers: Use the circuit breaker pattern to automatically stop sending requests to a failing AI service. This gives the failing service time to recover instead of being overwhelmed, and it lets your application serve the fallback immediately rather than waiting on timeouts.

- Use feature flags for new models: Deploy new AI features behind feature flags. If the new model causes issues, you can instantly disable it for all users with a single click, reverting to the stable version without a full redeployment.

- Test your fallback paths: Your "unhappy paths" are just as critical as your "happy paths." Write automated tests that simulate AI service failures and verify that your fallback mechanisms trigger correctly.
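A circuit breaker combined with a fallback can be sketched in a few dozen lines. This minimal, single-threaded example assumes that any exception from the primary call counts as a failure. After `max_failures` consecutive failures, the breaker opens and serves the fallback without calling the service. Once `reset_after` seconds have passed, it lets a trial request through. The class and parameter names are hypothetical, and production code would normally rely on a maintained resilience library.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures, then retries after `reset_after` seconds."""

    def __init__(self, max_failures: int = 5, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Open: do not touch the failing service; serve the fallback immediately.
                return fallback()
            # Half-open: let a trial request through.
            self.opened_at = None
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                # A failed trial request re-opens the breaker right away.
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```

Because the clock is injectable, the fallback path can be covered by fast automated tests that simulate a failing model, which is exactly the kind of unhappy-path test recommended above.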

7. Compliance, privacy, and regulatory requirements verification

Launching a system without verifying its legal standing is a massive, avoidable risk. This step ensures your system adheres to regulations like GDPR, CCPA, and HIPAA, protecting customer data and avoiding crippling fines. It involves a thorough assessment of data handling practices, from collection and residency to consent management and deletion policies.

This process is an integrated part of the development lifecycle. It requires embedding privacy-by-design principles from the beginning. From an AI perspective, this includes auditing how Personally Identifiable Information (PII) is handled in training datasets and ensuring retention policies are programmatically enforced, making this a critical component of any production readiness checklist.

Actionable tips for implementation

- Conduct a Privacy Impact Assessment (PIA) early: A PIA performed during the design phase helps identify and mitigate privacy risks before they are built into the system's core architecture.

- Document everything for the audit trail: Meticulously record all data sources, processing activities, retention periods, and the business purpose for each. This documentation is invaluable for demonstrating compliance.

- Implement privacy-by-design: Build your system to minimize data collection from the start. Anonymize or pseudonymize data wherever possible and establish automated data deletion policies.

- Collaborate with legal and compliance teams: Treat your legal and compliance experts as core project stakeholders. Involve them during design and development, not just for a final review. For ensuring the secure handling of customer data, a practical guide to achieving SOC 2 compliance can be an invaluable resource.
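Two of the privacy-by-design practices above, pseudonymization and automated deletion, can be sketched with the standard library. The example below replaces identifiers with a keyed HMAC-SHA256 token, so the same user always maps to the same token without the raw identifier being stored. It also selects records that are past a retention period. The key, the record shape (a `created_at` field), and the function names are assumptions for illustration. Note that under GDPR, pseudonymized data generally still counts as personal data, so it remains in scope.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash; the same input and key give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def expired(records: list[dict], retention: timedelta, now: datetime) -> list[dict]:
    """Return records older than the retention period, ready for deletion."""
    cutoff = now - retention
    return [r for r in records if r["created_at"] < cutoff]
```

The HMAC key should come from the same secrets manager described in the security section. Without the key, tokens cannot be recomputed from guessed identifiers, which is the main advantage over a plain, unkeyed hash.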

8. Model versioning, rollback, and deployment strategy testing

Deploying a new model into production shouldn't be a leap of faith. A robust deployment strategy, complete with versioning and rapid rollback capabilities, is a critical safety net. This process involves a disciplined approach to introducing changes, monitoring their impact, and having an automated plan to revert if things go wrong. Without it, a single faulty model deployment can degrade user experience or erode customer trust in minutes.

Effective deployment isn't about avoiding failure but about minimizing its blast radius. Strategies like canary releases (exposing a new model to a small subset of users) allow you to validate performance with live traffic before committing to a full rollout, making your deployment process a key part of your production readiness checklist.

Actionable tips for implementation

- Start with canary deployments: Never deploy a new model to 100% of traffic at once. Begin with a small, monitored group (1-5%) to validate performance and catch unforeseen issues.

- Define automated rollback triggers: Set clear, automated triggers based on key metrics. For example, "if p99 latency increases by over 20% or the error rate exceeds 0.5%, automatically revert to the previous version."

- Use shadow mode for pre-validation: Before a canary release, deploy your new model in "shadow mode" to run alongside the current production model. It receives live traffic, but its predictions aren't served to users, so you can compare performance without risk.

- Keep multiple versions ready: Store several previous stable model versions, not just the last one. This provides more options for a quick rollback. You can learn more about the tools that facilitate these processes by exploring the best MLOps platforms.
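A canary needs two pieces of logic: a stable way to route a small share of users to the new model, and a rollback rule. The sketch below hashes the user ID so that each user consistently sees the same version. It then encodes the example trigger from above: roll back if p99 latency rises more than 20% over the baseline or the error rate exceeds 0.5%. The function names and the `Metrics` structure are hypothetical. In practice, this routing often lives in a service mesh or in a deployment tool.

```python
import hashlib
from dataclasses import dataclass


def in_canary(user_id: str, percent: float) -> bool:
    """Deterministically assign a stable slice of users to the canary."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999
    return bucket < percent * 100


@dataclass
class Metrics:
    p99_latency_ms: float
    error_rate: float  # fraction, e.g. 0.005 == 0.5%


def should_roll_back(baseline: Metrics, canary: Metrics,
                     max_latency_increase: float = 0.20, max_error_rate: float = 0.005) -> bool:
    """Mirror the example trigger: p99 up more than 20% or error rate above 0.5%."""
    latency_regressed = canary.p99_latency_ms > baseline.p99_latency_ms * (1 + max_latency_increase)
    return latency_regressed or canary.error_rate > max_error_rate
```

Hashing user IDs instead of picking requests at random keeps each user's experience consistent during the canary. It also makes per-user comparisons against the baseline meaningful.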

9. Cost monitoring and budget controls

An AI system that breaks the bank is not production-ready. Cost monitoring and budget controls are essential guardrails that prevent your AI solutions from becoming financially unsustainable. This practice involves tracking all associated costs (compute, storage, and third-party API calls) against a predefined budget. By establishing cost baselines and implementing controls, you keep the system on track to deliver a positive return on investment.

Effective cost management requires a continuous feedback loop where you identify key cost drivers and optimize inefficient processes. Without this discipline, a successful model that scales rapidly can paradoxically lead to financial distress, making this a critical component of any production readiness checklist.

Actionable tips for implementation

- Break down costs by component: Tag resources consistently (for example, by model, environment, and team), then use cloud provider tools like AWS Cost Explorer to dissect your bill. Identify the biggest drivers, which are often model inference endpoints or intensive training jobs.

- Monitor third-party API spend: API costs, especially for services like generative AI models, can spiral quickly. Implement strict rate limiting, caching strategies, and alerts to control usage before it exceeds budget.

- Implement chargeback or showback: Attribute costs back to the specific product teams consuming the resources. This creates direct accountability and aligns incentives for efficiency.

- Establish and trend cost baselines: Set a baseline cost per prediction or per user, and monitor it over time to confirm that cost growth tracks business growth rather than accidental waste.
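Here is a minimal sketch of the baseline idea. It assumes you can export one `(total_cost, predictions)` pair per day from your billing and serving metrics. It flags a day whose cost per prediction exceeds the trailing seven-day average by more than 25%. The window and the tolerance are hypothetical defaults.

```python
from statistics import mean


def cost_alert(daily: list[tuple[float, int]], baseline_days: int = 7, tolerance: float = 0.25) -> bool:
    """daily: (total_cost, predictions) per day, oldest first; the last entry is today.

    Flags today when its cost per prediction exceeds the trailing baseline by more than `tolerance`.
    """
    if len(daily) <= baseline_days:
        return False  # not enough history for a baseline yet
    history = daily[-baseline_days - 1:-1]
    unit_costs = [cost / preds for cost, preds in history if preds > 0]
    today_cost, today_preds = daily[-1]
    if today_preds == 0:
        return today_cost > 0  # spend with no traffic is worth a look
    if not unit_costs:
        return False
    baseline = mean(unit_costs)
    return today_cost / today_preds > baseline * (1 + tolerance)
```

Because the check works on unit cost, a day on which both spend and traffic double does not trigger an alert. A day on which spend doubles with flat traffic does, which is the distinction between business growth and waste described above.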

10. Documentation, runbooks, and knowledge transfer

A system that only one person understands is a production incident waiting to happen. Comprehensive documentation is the institutional memory of your AI system. This critical component of any production readiness checklist covers everything from system architecture and model logic to deployment procedures and operational runbooks. It enables teams to operate, troubleshoot, and evolve the system reliably without depending on the original creators.

Effective documentation transforms a complex system into a manageable asset. It reduces incident response times, accelerates onboarding, and prevents knowledge loss.

Example workflow: Incident response runbook

- Alert fires: PagerDuty alert: "Model X prediction latency p99 > 500ms for 5 mins."

- Acknowledge and investigate: On-call engineer checks the linked Grafana dashboard.

- Initial diagnosis: Dashboard shows a spike in CPU utilization on inference servers.

- Action: Engineer consults the runbook, which suggests scaling the inference cluster horizontally.

- Resolution: Engineer executes the documented scaling command. Latency returns to normal within 3 minutes.

- Post-mortem: Team documents the incident and reviews auto-scaling policies to prevent recurrence.
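The alert in this workflow fires only when p99 latency stays above 500 ms for five minutes, so a single slow sample does not page anyone. Monitoring systems such as Prometheus express this with a `for` duration on an alerting rule. The Python sketch below only illustrates the logic. It assumes one p99 sample per minute, and the class name is hypothetical.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire only when every sample in the last `window` samples breaches the threshold."""

    def __init__(self, threshold: float, window: int):
        self.threshold = threshold
        self.window = window
        self.recent = deque(maxlen=window)  # True for each sample above the threshold

    def observe(self, value: float) -> bool:
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.window and all(self.recent)
```

With `SustainedThresholdAlert(threshold=500.0, window=5)`, five consecutive per-minute samples above 500 ms fire the alert. The first sample back under the threshold clears it.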

Actionable tips for implementation

- Create runbooks before you deploy: Build detailed, step-by-step incident response guides covering common failure scenarios, such as "model predictions are returning nulls" or "data pipeline is stalled."

- Use Architecture Decision Records (ADRs): Document why you chose a specific technology or design pattern. An ADR captures the context and consequences of a significant architectural decision.

- Maintain a 'Model Card' for every model: This document should detail the model's intended use cases, performance metrics, inputs, outputs, and known limitations.

- Schedule regular documentation reviews: Institute a quarterly review process to update runbooks, architecture diagrams, and model cards to reflect the current state of the production system.
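Keeping model cards as structured data rather than free-form documents makes it possible to check them automatically. The sketch below is one hypothetical layout covering the fields listed above, plus a review date for the quarterly process. It flags any section left empty and serializes the card so it can be versioned next to the model artifact. The field names are assumptions, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelCard:
    name: str
    version: str
    intended_use: str
    inputs: list[str]
    outputs: list[str]
    metrics: dict[str, float]
    known_limitations: list[str]
    last_reviewed: str  # ISO date of the most recent quarterly review

    def missing_sections(self) -> list[str]:
        """Fields that are still empty; a non-empty result could block a release."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize so the card can be versioned next to the model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

A CI step could then fail the deployment whenever `missing_sections()` is non-empty, so an undocumented model never reaches production.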

Your next step: from checklist to action plan

You now have a blueprint for launching AI systems with confidence. The difference between a successful launch and a failed pilot often comes down to disciplined preparation. This production readiness checklist is your guide. We've walked through ten critical pillars, from data quality and security to cost monitoring and documentation.

The core takeaway is that production readiness is a continuous state of preparedness. It’s a culture of anticipating failure, measuring performance, and ensuring every component is secure, scalable, and compliant.

To create real value, transform this checklist into an action plan. Start by treating this process like a mini-audit. Score your current system for each of the ten items using a "Red, Yellow, Green" status. Then, prioritize the top three gaps that pose the highest risk to your launch and create specific, owner-assigned tickets in your project management tool.
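The scoring step can be as lightweight as a short script or a spreadsheet. The sketch below records a Red/Yellow/Green status for each area, along with a hypothetical 1 to 5 impact weight agreed with stakeholders and an owner. It returns the three highest-risk gaps, worst status first and then highest impact. The weighting scheme is an assumption added for ordering within a color.

```python
from dataclasses import dataclass

RISK_ORDER = {"Red": 2, "Yellow": 1, "Green": 0}


@dataclass
class Assessment:
    area: str
    status: str  # "Red", "Yellow", or "Green"
    impact: int  # hypothetical 1-5 launch-risk weight
    owner: str


def top_gaps(items: list[Assessment], n: int = 3) -> list[Assessment]:
    """Return the n highest-risk non-green areas: worst status first, then highest impact."""
    gaps = [a for a in items if a.status != "Green"]
    return sorted(gaps, key=lambda a: (RISK_ORDER[a.status], a.impact), reverse=True)[:n]
```

Each returned item already carries an owner, so it maps directly onto an owner-assigned ticket.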

By converting this comprehensive production readiness checklist into a prioritized set of actionable tasks, you bridge the crucial gap between strategy and execution.

We at N² labs can help you navigate the complexities of AI production readiness. Our independent AI readiness assessment delivers a clear scorecard and a prioritized, actionable roadmap, helping you move from pilot to production with speed and confidence.

FAQ

What is a production readiness checklist?

A production readiness checklist is a systematic tool used to verify that a software system, particularly an AI or data-driven application, is prepared for deployment to a live environment. It covers critical areas like data quality, security, scalability, monitoring, and compliance to de-risk the launch and ensure reliability.

Why do AI systems need a specific production readiness checklist?

AI models have failure modes that traditional software does not. An AI-specific readiness checklist is crucial because it addresses challenges like data drift, gradual model performance degradation, and the high computational cost of inference. It ensures the model is not only accurate but also robust, secure, and cost-effective in production.

How do you ensure infrastructure readiness for an AI system?

To ensure infrastructure readiness, perform a thorough capacity and scalability assessment. This involves conducting realistic load tests that mimic user behavior, defining and testing auto-scaling policies, and provisioning for at least double your expected peak traffic. This process validates that your system can handle real-world demand without performance degradation.

What does an operational readiness review include?

An operational readiness review (ORR) typically includes verifying monitoring and alerting setups, confirming that detailed documentation and runbooks are in place, validating error handling and fallback mechanisms, and ensuring a tested deployment and rollback strategy exists. This review confirms the team is equipped to operate and support the system once it is live.