Written by: Igor

Published: December 2025

Getting an AI model from a Jupyter notebook to a production system that generates revenue is where most AI initiatives stall. Many companies struggle to operationalize their models, a challenge highlighted by a recent Gartner report showing that only 54% of AI projects make it from pilot to production. This gap isn't a failure of the model; it's a failure of operations. MLOps (Machine Learning Operations) provides the framework to deploy, monitor, and govern models, turning costly experiments into core business drivers.

This guide cuts through the hype to provide a clear, practical comparison of the best MLOps platforms available today. We'll analyze the top 12 solutions, from enterprise ecosystems like AWS SageMaker to open-source standards like MLflow, so you can find the right fit for your team and budget. You will learn how to evaluate platforms based on your current tech stack, team skills, and business goals.

Key takeaways

- Start with your team: Choose a platform that matches your team's existing skills, whether they are Python-focused data scientists or Kubernetes-native engineers.

- Evaluate total cost: "Free" open-source tools require significant engineering overhead, while managed platforms can offer a faster ROI by reducing operational workload.

- Prioritize integration: The best MLOps platforms connect seamlessly with your existing data warehouses, source control, and CI/CD pipelines.

- Test with a pilot project: The only way to know for sure is to run a small, time-boxed proof-of-concept with your top 2-3 choices.

Understanding the MLOps landscape

The MLOps market is fragmented, ranging from all-in-one platforms offered by major cloud providers to specialized, best-of-breed tools and flexible open-source frameworks. Choosing the right one depends entirely on your specific context: your cloud environment, team expertise, budget, and governance requirements.

A startup already running on AWS will likely find Amazon SageMaker a natural extension of its stack. In contrast, an enterprise with a multi-cloud strategy and strong in-house engineering may prefer the control and portability of an open-source solution like Kubeflow.

The top platforms: cloud providers

For teams already invested in a major cloud ecosystem, the native MLOps platforms are often the path of least resistance. They offer deep integration, consolidated billing, and familiar security models.

1. Amazon SageMaker (AWS)

Amazon SageMaker is a fully managed platform covering the entire machine learning lifecycle. It offers tools for data preparation, model building, training, deployment, and monitoring. Its primary strength is its deep, native integration with the expansive AWS ecosystem, making it a strong contender for teams already invested in AWS.

This tight integration allows for robust security through AWS Identity and Access Management (IAM) and Virtual Private Cloud (VPC). The platform supports a vast array of compute instances, including specialized hardware for cost-effective inference. If you're heavily reliant on AWS, SageMaker is a natural and powerful extension. You can learn more by conducting an AI readiness assessment for your current stack to see how SageMaker might fit.

Key features & considerations

- Best for: Organizations deeply integrated with AWS seeking an end-to-end, enterprise-grade solution.

- Pricing: Pay-as-you-go. While flexible, this can lead to complex and hard-to-predict costs.

- Pros: Unmatched integration with AWS services, extensive security controls, and elastic scaling.

- Cons: Steep learning curve, and the pricing model requires diligent management to avoid unexpected expenses.

- Website: aws.amazon.com/sagemaker

2. Google Cloud Vertex AI

Google Cloud Vertex AI is a unified MLOps platform that consolidates Google's AI services into a single environment. It streamlines the entire ML lifecycle, from data preparation with BigQuery to model training, deployment, and monitoring. Its key differentiator is the seamless integration with Google's powerful data and analytics ecosystem.

This connection allows data scientists to build models directly on data in BigQuery without complex data movement, accelerating development. The platform also offers first-class support for Tensor Processing Units (TPUs), Google's custom hardware for AI, providing a performance advantage for large-scale training.

Key features & considerations

- Best for: Companies committed to Google Cloud Platform, especially those using BigQuery and GKE.

- Pricing: A granular, pay-as-you-go model for each service component.

- Pros: Excellent integration with GCP services, robust built-in model monitoring, and access to high-performance TPUs.

- Cons: Primarily beneficial for organizations already within the GCP ecosystem. The pricing structure can be complex to predict.

- Website: cloud.google.com/vertex-ai

3. Microsoft Azure Machine Learning

Microsoft Azure Machine Learning is an enterprise-grade platform that provides a comprehensive MLOps environment, including a visual designer and automated ML capabilities. Its key differentiator is seamless integration with the broader Azure stack, making it one of the best MLOps platforms for organizations committed to Microsoft's cloud.

Teams can reuse existing security frameworks such as Microsoft Entra ID (formerly Azure Active Directory), and the platform works natively with Azure DevOps and GitHub Actions, so established CI/CD practices extend directly into the machine learning lifecycle. If your organization operates heavily within the Microsoft ecosystem, Azure Machine Learning offers a powerful and cohesive MLOps experience.

Key features & considerations

- Best for: Enterprises standardized on Microsoft Azure that require strong governance and security.

- Pricing: No separate platform fee; you pay for the underlying Azure compute, storage, and networking resources.

- Pros: Strong governance and security alignment, native integration with Microsoft tooling, and a unified environment.

- Cons: The learning curve can be steep for those unfamiliar with the Azure ecosystem.

- Website: azure.microsoft.com/en-us/products/machine-learning

The top platforms: end-to-end solutions

These platforms aim to provide a comprehensive, often cloud-agnostic solution that covers the entire ML lifecycle. They are ideal for organizations seeking a unified environment that isn't tied to a single cloud provider.

4. Databricks Data Intelligence Platform (Lakehouse)

The Databricks platform positions itself as a unified solution for data and AI, built on an open lakehouse architecture. It breaks down silos between data engineering, analytics, and machine learning. This makes it one of the best MLOps platforms for organizations aiming to streamline the entire data-to-model pipeline in a single environment, with consistent deployment across AWS, Azure, and GCP.

Databricks integrates MLflow natively, providing robust tools for experiment tracking and model registry services. Governance is centralized through its Unity Catalog, which manages access controls and lineage for data, features, and models, a critical component for enterprise-grade MLOps.

Key features & considerations

- Best for: Data-centric organizations looking to unify data engineering, analytics, and ML on a cross-cloud platform.

- Pricing: A pay-as-you-go model based on Databricks Units (DBUs). Costs can scale significantly with large workloads.

- Pros: Excellent for unifying data and AI teams, strong multi-cloud support, and deep integration with MLflow.

- Cons: Can introduce operational complexity. Costs can become substantial without disciplined resource monitoring.

- Website: www.databricks.com

5. DataRobot

DataRobot is an enterprise AI platform designed for end-to-end automation, from data preparation and AutoML to MLOps and governance. It's positioned for organizations that need to rapidly build and manage models at scale. Its key differentiator is a heavy emphasis on governance and flexible deployment options, including on-premise, hybrid, and multi-cloud. The MLOps market is expected to grow to $16.9 billion by 2028 (source: MarketsandMarkets), and platforms with strong governance are poised to capture a significant share.

DataRobot's integrated approach allows teams to deploy models, continuously monitor performance, and automate retraining pipelines with built-in compliance checks. For teams evaluating such a robust system, it's useful to understand how to implement AI in business to map platform capabilities to strategic goals.

Key features & considerations

- Best for: Large enterprises and regulated industries requiring mature governance and auditable MLOps workflows.

- Pricing: Enterprise-focused pricing that is not publicly listed. Procurement is handled through a sales-led process.

- Pros: Strong enterprise governance features, flexible deployment models, and broad industry adoption.

- Cons: Lack of transparent pricing makes it less accessible for smaller teams. Best suited for larger organizations.

- Website: www.datarobot.com

6. Domino Data Lab

Domino Data Lab is an enterprise MLOps platform focused on providing governed, self-service infrastructure for data science teams. It centralizes the entire model lifecycle with a strong emphasis on security, reproducibility, and collaboration. The platform's main value is its ability to serve large, regulated organizations that need a flexible yet controlled environment.

Domino offers one-click access to elastic compute and popular IDEs, letting data scientists work in familiar environments. This is coupled with a robust model registry and integrated monitoring for model drift. Its flexible deployment options make it a versatile choice for enterprises with specific data residency or security requirements.

Key features & considerations

- Best for: Large, regulated enterprises in sectors like finance and life sciences that require stringent governance and predictable costs.

- Pricing: Quote-based, user-centric subscription model.

- Pros: Highly predictable user-based subscription model. Built for enterprise governance with flexible deployment.

- Cons: Overly complex and expensive for smaller teams. Direct vendor engagement is required to get pricing.

- Website: domino.ai

7. H2O.ai (H2O AI Cloud)

H2O.ai offers an AI platform that combines its strengths in AutoML and Generative AI with core MLOps capabilities. It is designed to accelerate the creation and deployment of AI models. The platform's standout feature is its powerful, automated approach to model development, coupled with a strong emphasis on explainability.

This makes H2O AI Cloud one of the best MLOps platforms for teams that prioritize automation and interpretability. Its availability in secure, air-gapped configurations makes it a compelling choice for government agencies and companies in finance or healthcare.

Key features & considerations

- Best for: Organizations in regulated sectors that require powerful AutoML, model explainability, and secure deployment options.

- Pricing: Available directly or through cloud marketplaces like AWS and Azure.

- Pros: Strong AutoML and explainability features, options for secure/private deployments, and clear trial paths.

- Cons: The breadth of features can necessitate a guided onboarding process.

- Website: h2o.ai

The top platforms: specialized and open-source tools

This category includes tools that excel at specific parts of the MLOps lifecycle, as well as open-source frameworks that provide maximum flexibility and control.

8. Weights & Biases (W&B)

Weights & Biases is a developer-first MLOps platform that excels at experiment tracking, model evaluation, and artifact versioning. It is popular for its ease of use and powerful visualization tools that help teams track, compare, and reproduce their work. It integrates seamlessly into existing ML workflows without requiring a complete overhaul of the stack.

W&B's core strength is providing a centralized system of record for the entire ML lifecycle. This includes logging metrics, hyperparameters, and model artifacts with full lineage. Its focus on developer experience makes it one of the best MLOps platforms for teams prioritizing rapid iteration.

Key features & considerations

- Best for: ML teams of all sizes that need a best-in-class tool for experiment tracking and model reproducibility.

- Pricing: Offers a generous free tier for individuals. Paid tiers (Pro and Enterprise) are available for teams.

- Pros: Extremely easy to adopt and integrate. The community support is extensive, with transparent pricing tiers.

- Cons: May need to be supplemented with other tools for production model serving and infrastructure orchestration.

- Website: wandb.ai

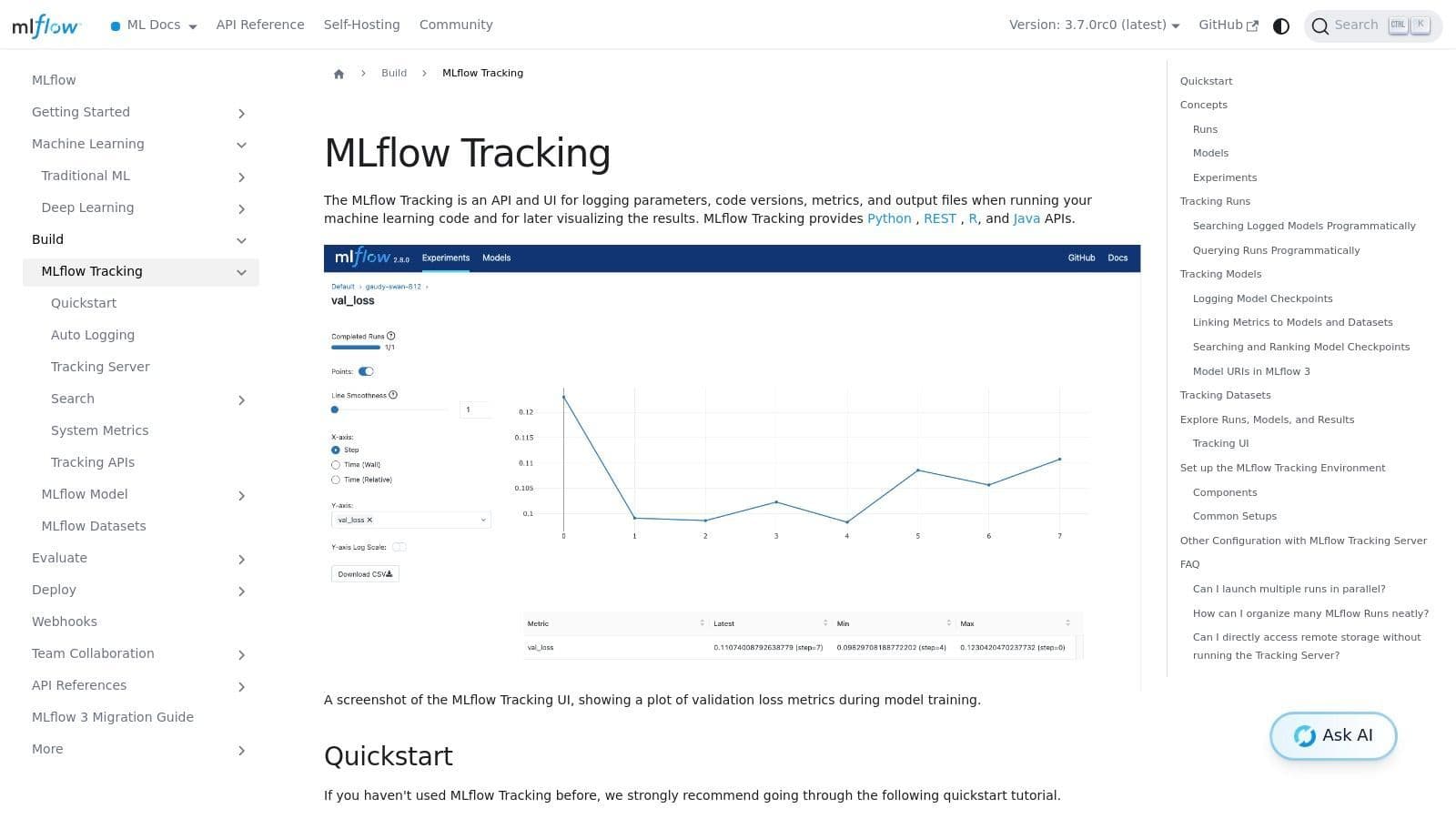

9. MLflow (Open-source MLOps)

MLflow is a leading open-source platform for managing the machine learning lifecycle. Its primary appeal is its vendor-neutral, portable nature, allowing teams to avoid lock-in. As one of the best MLOps platforms for teams prioritizing flexibility, it provides a standardized way to log parameters, metrics, and artifacts.

The platform has four components: Tracking, Projects, Models, and a Model Registry. This modularity means you can adopt only the parts you need. Since MLflow is open-source, the responsibility for hosting and scaling falls on your team, but this grants unparalleled control.
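To make the idea of logging parameters, metrics, and artifacts concrete, here is a minimal stdlib-only sketch of the kind of record an experiment tracker keeps. It is not MLflow's API: the `Run` class, the `save_run` and `best_run` helpers, and all numbers are hypothetical and exist only for illustration.

```python
import json
import tempfile
from dataclasses import dataclass, field, asdict
from pathlib import Path


@dataclass
class Run:
    """One training run: parameters used, metrics observed, artifact paths."""
    name: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)
    artifacts: list = field(default_factory=list)


def save_run(run: Run, store: Path) -> Path:
    """Persist a run as JSON so it can be compared and reproduced later."""
    store.mkdir(parents=True, exist_ok=True)
    path = store / f"{run.name}.json"
    path.write_text(json.dumps(asdict(run), indent=2))
    return path


def best_run(store: Path, metric: str) -> dict:
    """Load every stored run and return the one with the highest `metric`."""
    runs = [json.loads(p.read_text()) for p in store.glob("*.json")]
    return max(runs, key=lambda r: r["metrics"][metric])


# Hypothetical runs with made-up numbers, for illustration only.
store = Path(tempfile.mkdtemp()) / "runs"
save_run(Run("baseline", {"learning_rate": 0.1}, {"auc": 0.81}, ["model_v1.pkl"]), store)
save_run(Run("tuned", {"learning_rate": 0.01}, {"auc": 0.86}, ["model_v2.pkl"]), store)

winner = best_run(store, "auc")
print(winner["name"], winner["params"])
```

A real tracking server adds what this sketch leaves out: concurrent writers, a UI for comparing runs, access control, and artifact storage. That is the hosting and scaling work that falls on your team with a self-managed tool.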

Key features & considerations

- Best for: Teams seeking a flexible, open-source foundation for their MLOps practices to avoid vendor lock-in.

- Pricing: Free and open-source. Costs are associated with the underlying infrastructure required to host it.

- Pros: Highly portable and framework-agnostic. The active open-source community provides strong support.

- Cons: Requires significant internal effort to deploy, manage, scale, and secure.

- Website: www.mlflow.org/docs/latest/ml/tracking

10. Kubeflow (Open-source MLOps on Kubernetes)

Kubeflow is a Kubernetes-native, open-source platform designed to make ML workflows on Kubernetes simple and scalable. It provides a modular toolkit, including Kubeflow Pipelines for orchestration and KServe for model serving. Its core strength is its cloud-agnostic nature; it runs consistently across any cloud provider or on-premise data center.

This platform is ideal for teams that prioritize portability. This flexibility, however, comes with the responsibility of setup and maintenance. Organizations considering Kubeflow should have strong Kubernetes expertise. Successfully navigating these common AI implementation challenges is key to leveraging its full potential.

Key features & considerations

- Best for: Organizations with a strong Kubernetes foundation seeking a customizable, cloud-agnostic framework.

- Pricing: Completely free and open-source. Costs are based on the underlying Kubernetes cluster resources.

- Pros: Runs anywhere Kubernetes is available, avoiding vendor lock-in. Highly composable and benefits from strong community support.

- Cons: Requires significant operational overhead for setup and maintenance. Has a steeper learning curve than managed services.

- Website: www.kubeflow.org

Research and aggregation resources

These resources are not platforms themselves but are critical for the evaluation and procurement process, providing peer reviews and simplified deployment.

11. AWS Marketplace (MLOps solutions)

AWS Marketplace is a digital catalog for discovering and deploying third-party software, including a wide array of MLOps platforms. It's a hub for finding specialized tools that can be launched directly into an existing AWS environment. This is powerful for teams that need to augment their current stack with specific capabilities.

The primary advantage is its deep integration with AWS accounts. Billing is consolidated into a single AWS invoice, and deployments often use pre-configured templates for rapid setup. This streamlines governance and simplifies adopting new tools.

Key features & considerations

- Best for: Companies on AWS needing to quickly deploy third-party MLOps tools with consolidated billing.

- Pricing: Varies widely by vendor and product, from free trials to subscriptions, all consolidated on the AWS bill.

- Pros: Centralized procurement and billing, fast deployment of pre-configured solutions, and a diverse catalog.

- Cons: Quality and support can vary significantly, requiring careful vetting of each vendor.

- Website: aws.amazon.com/marketplace

12. G2 - MLOps platforms category

While not a platform, the G2 category for MLOps Platforms is an indispensable research tool. It aggregates peer-based user reviews, satisfaction scores, and detailed feedback on leading solutions. This provides a practical reality check on usability, implementation challenges, and customer support quality.

Navigating this resource involves using filters to narrow down platforms by company size and required features. By comparing head-to-head reviews, you can identify common pain points and benefits that might not be obvious from a vendor’s website, making it a crucial step in finding one of the best MLOps platforms for your needs.

Key features & considerations

- Best for: Teams in the research phase seeking unbiased, crowd-sourced feedback to compare and shortlist vendors.

- Pricing: Free to browse reviews and comparison data.

- Pros: Provides an unfiltered look at user satisfaction, support quality, and ease of use.

- Cons: Review quality and quantity can be inconsistent. It is a research aggregator, not a vendor.

- Website: www.g2.com/categories/mlops-platforms

A simple framework for choosing your platform

Analysis paralysis is the enemy of progress. The goal is not to find the perfect platform on paper, but to find the right platform for your real-world use case. Use this simple checklist to guide your decision.

- [ ] Assess your team's skills: Does your team live in Python notebooks or Kubernetes dashboards? Match the tool to their expertise.

- [ ] Calculate total cost of ownership: Factor in both subscription fees and the internal engineering hours required for setup and maintenance.

- [ ] Map out key integrations: Does the platform connect easily with your data warehouse (e.g., Snowflake, BigQuery) and CI/CD tools (e.g., GitHub Actions, Jenkins)?

- [ ] Define a pilot project: Select a valuable but non-critical use case, like a lead scoring model, to test your top 2-3 choices.

- [ ] Run a time-boxed bake-off: Give a small team 2-4 weeks to implement the pilot on each shortlisted platform and evaluate the results.

This structured, hands-on approach will quickly reveal the practical strengths and weaknesses of each solution, moving you beyond marketing claims to tangible evidence.
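One way to turn the checklist into numbers is a small scoring sheet that combines total cost of ownership with weighted pilot scores. The sketch below is only an illustration: the candidate names, costs, hours, hourly rate, criteria, and weights are all placeholder assumptions, not vendor prices or recommended values.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    annual_license_usd: float   # subscription or platform fees
    annual_infra_usd: float     # compute, storage, networking
    engineering_hours: float    # setup and maintenance hours per year
    scores: dict                # pilot scores per criterion, 1 (poor) to 5 (excellent)


def total_cost_of_ownership(c: Candidate, hourly_rate_usd: float) -> float:
    """Fees plus infrastructure plus the cost of internal engineering time."""
    return (c.annual_license_usd
            + c.annual_infra_usd
            + c.engineering_hours * hourly_rate_usd)


def weighted_score(c: Candidate, weights: dict) -> float:
    """Weighted average of the pilot scores, on the same 1-5 scale."""
    total_weight = sum(weights.values())
    return sum(weights[k] * c.scores[k] for k in weights) / total_weight


# Placeholder figures for two hypothetical options.
managed = Candidate("managed_platform", 60_000, 40_000, 200,
                    {"team_fit": 4, "integration": 5, "governance": 4})
self_hosted = Candidate("self_hosted_open_source", 0, 50_000, 1_200,
                        {"team_fit": 3, "integration": 4, "governance": 3})

weights = {"team_fit": 2, "integration": 2, "governance": 1}  # assumed priorities
hourly_rate = 100.0                                           # assumed loaded rate

for c in (managed, self_hosted):
    tco = total_cost_of_ownership(c, hourly_rate)
    print(f"{c.name}: TCO ${tco:,.0f}/yr, score {weighted_score(c, weights):.1f}")
```

With these made-up inputs the "free" option ends up more expensive once engineering hours are priced in, but the outcome depends entirely on the numbers you collect during your own pilot.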

What to do next

Choosing the right MLOps platform is a critical step in building a scalable AI capability. The key is to align your choice with your team's skills, your existing infrastructure, and your budget. By using the framework above and running a hands-on pilot, you can confidently select one of the best MLOps platforms to turn your AI models into reliable, value-generating assets.

We at N² labs can help you design and implement the exact MLOps infrastructure you need to turn AI concepts into production reality. If you need an expert partner to help navigate the selection process and build a scalable AI roadmap, schedule a consultation with our team.

FAQ

What is an MLOps platform?

An MLOps platform is a software solution designed to streamline the machine learning lifecycle. It provides tools for data preparation, model training, deployment, monitoring, and governance, helping teams move models from experiment to production faster and more reliably. The best MLOps platforms unify data scientists, engineers, and operations teams in a single environment.

What is the best MLOps platform for a startup?

For a startup, the best MLOps platform is often one that maximizes speed and minimizes operational overhead. This could mean choosing a fully managed platform from your primary cloud provider (like AWS SageMaker or Google Vertex AI) to leverage existing infrastructure and billing. Alternatively, a developer-first tool like Weights & Biases combined with a simple serving solution can be a cost-effective way to get started.

What is the difference between MLOps and AIOps?

MLOps (Machine Learning Operations) focuses on the lifecycle of building and deploying machine learning models. Its goal is to make the ML development process repeatable and reliable. AIOps (AI for IT Operations), on the other hand, is about using AI and machine learning techniques to automate and improve IT operations, such as anomaly detection in system logs or predictive maintenance for servers.

Are open-source MLOps platforms a good choice?

Open-source platforms like Kubeflow and MLflow are an excellent choice for teams that require maximum flexibility, want to avoid vendor lock-in, and have strong in-house engineering expertise (especially with Kubernetes). While they are "free," they require significant investment in setup, maintenance, and scaling, so the total cost of ownership should be carefully considered.

What are the core components of an MLOps platform?

A comprehensive MLOps platform typically includes several core components:

- Experiment tracking: To log and compare model training runs.

- Model registry: A central repository to version, store, and manage models.

- Feature store: A centralized place to store and manage curated data features for training and serving.

- CI/CD automation: For automated testing and deployment of models.

- Model serving: Infrastructure to deploy models as APIs for real-time inference.

- Monitoring and observability: To track model performance, detect data drift, and trigger alerts.
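As an illustration of the data drift detection mentioned under monitoring, the sketch below computes the Population Stability Index (PSI), one common drift metric, between a training-time sample and a live sample of a single numeric feature. The bin count, the sample data, and the 0.25 alert threshold (a widely used rule of thumb, not a standard) are assumptions; managed platforms may use different metrics.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data for illustration: the live feature has shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.25  # assumed alerting threshold

for label, sample in (("unchanged", baseline), ("shifted", shifted)):
    score = psi(baseline, sample)
    status = "ALERT" if score > DRIFT_THRESHOLD else "ok"
    print(f"{label}: PSI={score:.3f} {status}")
```

In production this check would run on a schedule per feature, with alerts routed to the team that owns the model.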