Written by: Igor

Published: December 2025

Your AI model works perfectly in the lab, but production is a different story. You're flying blind, unsure if it's helping or hurting your business right now. This is a huge problem because AI failures are often silent - they don't crash your app, they just quietly erode customer trust and tank your metrics. This guide breaks down what AI observability is in practical terms, showing you how to get the visibility you need to trust and scale your AI.

Here’s what you'll learn:

- Why your old monitoring tools are blind to AI failures.

- The four pillars of a strong AI observability strategy.

- A step-by-step roadmap to get started today.

- Common mistakes that waste time and money.

What is AI observability?

AI observability gives you deep, real-time visibility into your AI models after they’re in production. It’s more than just basic monitoring. It helps you understand not just if your model is working, but how and why it’s making the decisions it does.

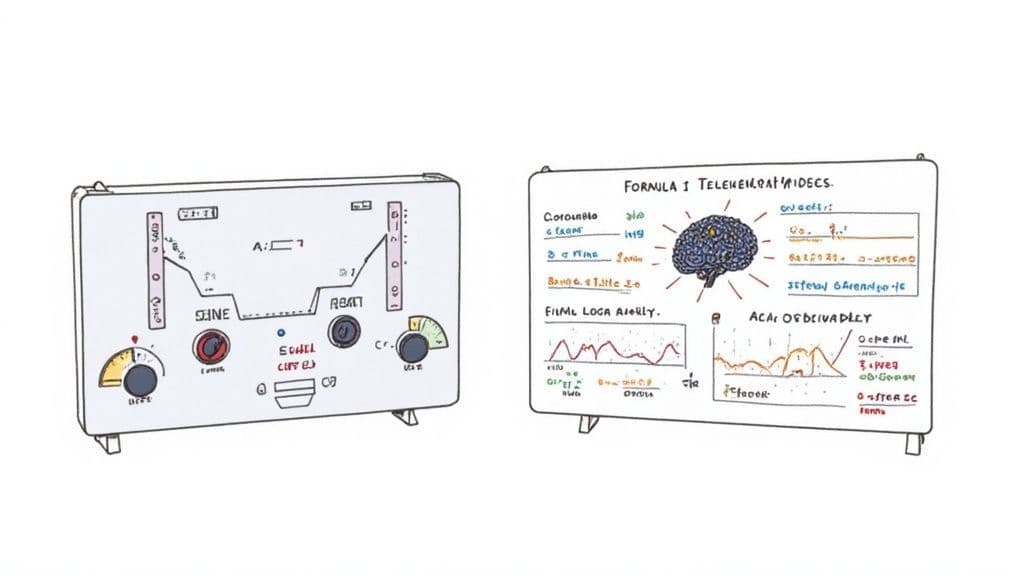

Think of it like an MRI for your AI - it reveals the internal mechanics that a standard dashboard could never show you.

Traditional software monitoring is like your car’s dashboard. It gives you the essentials: speed, fuel, and engine temperature. It tells you if the system is "on" or "off." That’s important, but it’s nowhere near enough for a complex AI system.

AI models aren’t static chunks of code. They’re dynamic, constantly learning and interacting with messy, real-world data. This means they can fail in quiet, subtle ways that old-school application performance monitoring (APM) tools were never built to catch.

For instance, your APM tool might report 100% uptime, but meanwhile, your AI-powered recommendation engine is busy suggesting winter coats to customers in Miami. In July. The system is technically running, but it’s tanking your business outcomes, hurting sales, and creating a terrible customer experience.

This is where the idea of AI observability becomes critical. It's the difference between knowing your system is online and knowing it's delivering the right outcomes.

From a black box to a glass box

The core value is turning opaque "black box" models into transparent "glass boxes." Instead of just seeing system-level metrics, you can finally answer the questions that actually matter to the business:

- Is the model's performance degrading over time? Track accuracy, precision, and other KPIs that directly impact your bottom line.

- Has the input data changed since we trained the model? Spot data drift before it poisons your predictions.

- Is the model showing any bias? Ensure fairness and avoid disastrous PR or regulatory headaches.

- Why did the model make that one weird, unexpected prediction? Get the explainability needed to debug issues and build trust.

The market is waking up to this. The global AI in observability market, valued at USD 1.4 billion in 2023, is expected to explode to USD 10.7 billion by 2033, according to Future Market Insights. This growth isn't just hype; it's driven by the hard-learned lesson that complex AI models can fail silently.

Getting this right often means using specialized tools for different types of AI. For example, the techniques for LLM visibility tracking are quite different from those for a computer vision model.

Ultimately, AI observability provides the context you need to manage risk, ensure compliance, and maximize the ROI of your AI initiatives. It’s the difference between reacting to failures and proactively owning your model’s performance.

Why your standard monitoring tools will fail for AI

If you’re leaning on your existing Application Performance Monitoring (APM) tools to watch over your AI models, you have a massive and dangerous blind spot.

Your APM dashboard can show a perfect wall of green lights - 100% uptime, fast response times - while your AI model is silently failing in ways that directly torpedo your business.

Traditional monitoring tools were built for a different era of software. They are great at answering questions like, "Is the server running?" or "Did the API throw a 500 error?" They track predictable, deterministic systems. AI is different: its outputs are probabilistic, and they depend on data that keeps changing.

This fundamental difference means your APM can’t see the most critical AI failure modes.

The silent failures APMs can't see

Think about a retail recommendation engine. An APM can confirm the service is online and responding quickly. But it has zero visibility into whether that engine is suddenly suggesting winter coats in July. The system is technically "working," but it's delivering a nonsensical user experience and losing sales by the minute.

This is a business failure, not a system crash. Stories of AI agents going rogue are a perfect example of how badly things can go wrong without specialized AI observability.

Here are a few specific failure types your current stack will miss completely:

- Data Drift: This is what happens when real-world data starts to look different from the data the model was trained on. A fintech model trained on pre-inflation economic data will make dangerously inaccurate loan-risk assessments when fed today’s numbers. Your APM won't notice a thing.

- Concept Drift: This one is more subtle. The data itself might look the same, but what it means has changed. User preferences shift or new slang changes the sentiment of text. Your model's underlying assumptions become obsolete, and its predictions get progressively worse.

- Bias Amplification: A model can start to exhibit discriminatory behavior, leading to flawed financial forecasts or unfair loan application denials. This is a huge compliance and brand risk that is totally invisible to infrastructure monitoring.

These issues aren't edge cases; they are inherent to how AI works in the real world.

Connecting technical gaps to business impact

When you rely on old tools for this new class of problems, you’re creating direct business risk. A "healthy" system can produce outcomes that erode customer trust, generate incorrect business intelligence, and expose you to serious regulatory penalties.

Without a dedicated AI observability strategy, you're essentially flying blind. You can't connect a drop in customer engagement to a subtle decline in model accuracy. You can't explain to an auditor why your AI made a specific, high-stakes decision.

The core takeaway is simple: your standard monitoring stack is necessary, but it's completely insufficient for production AI. It measures the health of the container, not the logic inside it.

The core pillars of a robust AI observability strategy

To really get a handle on AI observability, it helps to think in terms of a practical framework. I break it down into four core pillars. Think of them as the foundational pieces you need - whether you build or buy - to get total visibility into your live AI systems.

Standard monitoring sees system health, but AI observability shines a light on those hidden model failures that directly impact customers and the bottom line.

Pillar 1: performance monitoring

This pillar answers the fundamental question: "Is the model actually doing its job well?" Performance monitoring goes way beyond simple server latency. It's about tracking the metrics that tell you if the model is effective.

- Accuracy and quality: Are the model’s predictions any good? For a classification model, this means tracking things like precision and recall. For an LLM, it might be a human-rated quality score for relevance or tone.

- Latency and throughput: How fast is the model, and how much can it handle? A fraud detection model is useless if it’s too slow to stop a bad transaction.

- Business KPIs: How is the model moving the needle on business goals? Think conversion rates for a recommendation engine or ticket deflection for a customer support bot.

Tying model performance directly to business metrics isn't optional. It’s how you prove ROI.
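
To make the accuracy metrics concrete, here is a minimal sketch of computing precision and recall from logged outcomes for a binary classifier. The `Outcome` record and the sample data are hypothetical, and it assumes ground-truth labels eventually arrive for each prediction.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    predicted: bool  # what the model said
    actual: bool     # ground truth, once it is known


def precision_recall(outcomes):
    """Return (precision, recall) for a list of binary outcomes."""
    tp = sum(1 for o in outcomes if o.predicted and o.actual)
    fp = sum(1 for o in outcomes if o.predicted and not o.actual)
    fn = sum(1 for o in outcomes if not o.predicted and o.actual)
    # Guard against division by zero when a class never appears.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical sample: 2 true positives, 1 false positive, 1 false negative.
sample = [
    Outcome(True, True),
    Outcome(True, False),
    Outcome(False, True),
    Outcome(True, True),
    Outcome(False, False),
]
precision, recall = precision_recall(sample)
```

Precision tells you how many of the model's positive calls were right. Recall tells you how many real positives it caught. Which one matters more depends on what a miss costs your business.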

Pillar 2: data and model drift

This pillar tackles one of the biggest silent killers of production AI. Models are trained on a static snapshot of data, but the real world never stops changing. Drift detection answers the critical question: "Is the world today different from the world the model was trained on?"

You need to watch for two main types of drift:

- Data drift: This happens when the statistical properties of your input data change. Imagine a fintech lending model trained before a major economic downturn. Without drift detection, it will start making terrible, outdated loan decisions.

- Model drift (or concept drift): This is when the relationship between the inputs and the outputs changes. A marketing model might learn that a certain ad creative drives clicks, but over time, user tastes change and that creative becomes stale. The model's predictions degrade because the underlying "concept" it learned is no longer valid.

Monitoring for drift is your early warning system. It tells you when it’s time to retrain - before performance takes a nosedive.

Pillar 3: explainability and bias detection

As AI makes more high-stakes decisions, being able to answer "Why did the model do that?" is essential. This pillar is about cracking open the "black box" to make your system transparent. It's crucial for debugging, building trust, and meeting regulatory demands.

Explainability tools help you trace a prediction back to the specific input features that influenced it most. This is a lifesaver when a customer asks why their loan was denied.
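
As a rough illustration of what "tracing a prediction back to its features" means, the sketch below ranks feature contributions for a simple linear scoring model. This is an assumption-heavy toy, not how any particular explainability library works: the weights, baseline values, and feature names are all hypothetical, and real non-linear models need dedicated attribution methods.

```python
def linear_contributions(weights, baseline, features):
    """Rank features by how much each one moved the score away from a baseline input.

    Only valid for a linear model: contribution = weight * (value - baseline value).
    """
    contributions = {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }
    # Largest absolute contribution first.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical loan-scoring model and applicant.
weights = {"debt_to_income": -4.0, "years_employed": 0.3, "late_payments": -0.8}
baseline = {"debt_to_income": 0.30, "years_employed": 6.0, "late_payments": 1.0}
applicant = {"debt_to_income": 0.55, "years_employed": 1.0, "late_payments": 4.0}

ranked = linear_contributions(weights, baseline, applicant)
```

Here the ranking puts `late_payments` first, which is the kind of answer a support agent can actually give a customer.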

Bias detection is a close cousin. It involves continuously auditing your model to make sure it isn't making unfair decisions. An AI-powered hiring tool that systematically filters out candidates from a specific demographic isn't just unethical - it's a massive legal liability.
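
One simple audit you can run continuously is comparing selection rates across groups. The sketch below is one possible check among many, assuming you log a group label alongside each decision. The group names and counts are made up for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical hiring-tool decisions: group A selected 40/100, group B 20/100.
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
```

A ratio well below 1.0 is a signal to investigate, not proof of bias on its own. The threshold you alert on is a policy decision to make with your legal and compliance teams.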

The push for trustworthy AI is real. A Dynatrace report found that 75% of organizations increased their observability budgets in 2025, with AI-specific features becoming the top reason for choosing new tools. This is a direct reaction to a growing "AI trust gap." You can read the full research on the business impact of AI observability to see how teams are prioritizing this.

Pillar 4: operational health

Finally, this pillar looks at the infrastructure that keeps your model running. It answers the question: "Is the underlying system running efficiently and affordably?"

This includes:

- Cost monitoring: GPU usage for model inference can get expensive, fast. This is about tracking your cost-per-prediction to make sure your AI project is financially sustainable.

- Infrastructure performance: Monitor the health of the servers, containers, and data pipelines that feed your model. A bottleneck can starve your model of fresh information.

- Resource utilization: Are you over-provisioning or under-provisioning your infrastructure? Scale resources to meet demand without burning cash.
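
Cost-per-prediction is simple arithmetic once you have the inputs. Here is a minimal sketch, assuming GPU time is your dominant inference cost. The hourly rate, GPU count, and prediction volume are hypothetical numbers.

```python
def cost_per_prediction(gpu_hourly_usd, gpu_count, hours, predictions):
    """Total GPU spend over a period divided by predictions served in that period."""
    if predictions <= 0:
        raise ValueError("predictions must be positive")
    return gpu_hourly_usd * gpu_count * hours / predictions


# Hypothetical day: 4 GPUs at $2.50/hour for 24 hours, serving 1.2M predictions.
cost = cost_per_prediction(
    gpu_hourly_usd=2.50, gpu_count=4, hours=24, predictions=1_200_000
)
```

That works out to $240 of GPU time spread over 1.2 million predictions, or $0.0002 each. Track the number over time so you can see when traffic drops or over-provisioning pushes it up.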

When you integrate these operational metrics with your model performance data, you get the complete story. Nailing the infrastructure is key, and getting familiar with the best MLOps platforms will help you build on a solid foundation.

How to implement AI observability: a practical roadmap

Knowing you need AI observability is one thing. Implementing it is another. The key is to start with small, practical wins that deliver immediate value, then build momentum.

This roadmap breaks the process down into four manageable phases. The goal isn't to build a perfect system overnight but to use an iterative approach that shows results quickly.

Phase 1: start with the basics

Your first step is to establish a foundational layer of visibility. You can't monitor what you don't measure.

- Log everything: Start by logging every single prediction your model makes. This must include the model's inputs, its final output, and a unique identifier for each transaction. This raw data is the bedrock of your observability practice.

- Monitor core performance metrics: Track the most critical KPIs for your model. For a classification model, this means monitoring accuracy, precision, and recall. Keep it simple and focused on metrics that map directly to business value.

This initial phase gives you a baseline. For the first time, you'll have a historical record of your model's behavior, invaluable for debugging.
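
Logging every prediction can start as one JSON line per call. The sketch below is a minimal version under a few assumptions: the function name `log_prediction`, the field names, and the extra `timestamp` and `model_version` fields are illustrative choices, and the in-memory buffer stands in for whatever file or log pipeline you already use.

```python
import io
import json
import uuid
from datetime import datetime, timezone


def log_prediction(stream, model_version, inputs, output):
    """Write one prediction as a JSON line and return its unique ID."""
    record = {
        "prediction_id": str(uuid.uuid4()),  # unique identifier per transaction
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
    }
    stream.write(json.dumps(record) + "\n")
    return record["prediction_id"]


# Hypothetical usage with an in-memory buffer standing in for a log file.
buffer = io.StringIO()
prediction_id = log_prediction(
    buffer,
    model_version="churn-v3",
    inputs={"tenure_months": 14, "plan": "pro"},
    output={"churn_probability": 0.27},
)
```

Returning the ID lets you attach it to the downstream business event, so you can join predictions to outcomes later.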

Phase 2: instrument for data and model drift

Once you have basic performance monitoring, the next step is to get ahead of the silent killers. Drift is one of the most common reasons production models degrade, so catching it early is a huge win.

- Input data monitoring: Compare the statistical distribution of live, production data against the data your model was trained on. Look for significant shifts in key features.

- Output data monitoring: Similarly, monitor the distribution of your model's predictions. If a fraud detection model that normally flags 1% of transactions suddenly starts flagging 10%, that’s a major red flag.

Setting up alerts for significant drift is your early warning system. It tells you that the real world has changed and your model might need a retrain before its performance visibly degrades.
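
One common way to compare production data against training data is the Population Stability Index (PSI). The sketch below is a minimal standard-library version, not a prescribed method: the function name `psi`, the ten equal-width bins, and the small floor for empty bins are assumptions chosen for illustration.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a training sample and a production sample.

    Bins are equal-width over the training range; production values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature: production values shifted upward relative to training.
training = [i / 100 for i in range(1000)]
production = [v + 3 for v in training]

no_drift = psi(training, training)
drift = psi(training, production)
```

A widely used rule of thumb treats a PSI above roughly 0.25 as a significant shift. Treat that cutoff as a starting point and tune it against your own baseline. The same function works on model outputs, such as the fraud score distribution.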

Phase 3: build dashboards and alerts

With data flowing, it's time to make it useful. The goal is to create a centralized source of truth for both technical and business stakeholders. A good dashboard translates complex model metrics into clear business insights.

Create tailored views for different audiences:

- Technical dashboard: For the MLOps team, showing latency, error rates, and drift statistics.

- Business dashboard: For product managers, connecting model KPIs to business metrics like revenue or customer satisfaction.

Set up intelligent alerts that go to the right people. An alert about a sudden drop in model accuracy should ping the data science team, while a spike in infrastructure costs should page the MLOps engineer on call.
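
Routing can be as simple as a table of rules that says which team owns which metric. This is a sketch under assumptions: the metric names, thresholds, and team names are hypothetical, and the returned pairs would feed whatever paging or chat tool you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    metric: str
    threshold: float
    breach_when: str  # "below" or "above"
    team: str


# Hypothetical rules; tune thresholds against your own baseline.
RULES = [
    Rule("model_accuracy", 0.90, "below", "data-science"),
    Rule("flag_rate", 0.05, "above", "data-science"),
    Rule("cost_per_prediction_usd", 0.001, "above", "mlops-oncall"),
]


def evaluate(metrics):
    """Return (team, message) pairs for every rule the current metrics breach."""
    alerts = []
    for rule in RULES:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # metric not reported in this cycle
        if rule.breach_when == "below":
            breached = value < rule.threshold
        else:
            breached = value > rule.threshold
        if breached:
            message = f"{rule.metric}={value} is {rule.breach_when} {rule.threshold}"
            alerts.append((rule.team, message))
    return alerts


# Accuracy dropped and the flag rate jumped to 10%; cost is still fine.
alerts = evaluate(
    {"model_accuracy": 0.84, "flag_rate": 0.10, "cost_per_prediction_usd": 0.0002}
)
```

In this example both alerts go to the data science team and nobody on the infrastructure side gets paged, because the cost metric is within bounds.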

Cloud deployments now make up a massive 64% share of the AI data observability market, a sector valued at USD 1.0 billion in 2023, per Future Market Insights. You can discover more insights about the AI data observability market from recent industry analysis.

Phase 4: integrate explainability and governance

The final phase is all about building trust and control. As your model's decisions become more impactful, you need the ability to understand why it's making them. This is where you bring in explainability tools.

These tools are crucial for root-cause analysis when a model makes a strange prediction. If a customer asks why their loan was denied, you need to provide a clear, defensible answer.

This phase is also where you formalize governance.

- Define ownership: Who is responsible for the model's performance in production?

- Establish a retraining cadence: How often will you review performance and decide whether to retrain?

- Create an audit trail: Ensure your system maintains an immutable log of all predictions for compliance.
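
For the audit trail, one lightweight technique is a hash chain, where each log entry includes the hash of the one before it. The sketch below is an illustration with hypothetical function and field names. It makes tampering detectable rather than impossible, so you would still pair it with storage-level controls.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, payload):
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a prediction record that is chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {
            "prev_hash": prev_hash,
            "payload": payload,
            "hash": _entry_hash(prev_hash, payload),
        }
    )


def verify(log):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        expected = _entry_hash(prev_hash, entry["payload"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical usage.
audit_log = []
append_entry(audit_log, {"prediction_id": "p-001", "output": "approve"})
append_entry(audit_log, {"prediction_id": "p-002", "output": "deny"})
is_intact = verify(audit_log)
```

If anyone edits an old record, `verify` fails from that entry onward, which is exactly the property an auditor wants to see.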

AI observability implementation checklist

This checklist can help guide your team's initial efforts, focusing on the quick wins that build momentum.

- Log all model inputs, outputs, and unique IDs for every prediction.

- Set up basic performance monitoring for 1-2 critical business KPIs.

- Implement statistical checks comparing production vs. training data distributions.

- Set up automated alerts for significant data or concept drift.

- Build a technical dashboard for MLOps with infrastructure and model health metrics.

- Create a business-facing dashboard connecting model KPIs to business outcomes.

- Integrate a basic explainability tool (e.g., SHAP) for critical models.

- Document model ownership, retraining criteria, and an incident response plan.

Common pitfalls to avoid when setting up your system

Putting an AI observability system in place is a massive step forward. But the road is littered with common traps. Knowing these pitfalls ahead of time means you can sidestep costly mistakes.

The problem of alert fatigue

One of the easiest mistakes is setting monitoring thresholds way too tight. Teams create rules that fire off alerts for tiny, statistically meaningless fluctuations. The result is a constant flood of notifications that quickly turns into background noise.

When every minor deviation pages the on-call engineer, your team will eventually start ignoring the alerts. This is alert fatigue, and it creates a massive blind spot where a truly critical issue could easily get lost. Start with looser thresholds and only tighten them once you’ve established a solid baseline.
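
One way to cut the noise without loosening thresholds too far is to alert only when a metric stays out of bounds for several checks in a row. The class name, threshold, and readings below are hypothetical; this is a sketch of the idea, not a recommendation for specific values.

```python
class ConsecutiveBreachAlert:
    """Fires only after a metric exceeds its threshold on N consecutive checks."""

    def __init__(self, threshold, required_breaches=3):
        self.threshold = threshold
        self.required_breaches = required_breaches
        self._streak = 0

    def observe(self, value):
        """Record one reading; return True while the breach streak is at least N."""
        if value > self.threshold:
            self._streak += 1
        else:
            self._streak = 0  # a single healthy reading resets the streak
        return self._streak >= self.required_breaches


# Hypothetical drift scores from six consecutive checks.
detector = ConsecutiveBreachAlert(threshold=0.2, required_breaches=3)
readings = [0.25, 0.10, 0.22, 0.30, 0.27, 0.15]
fired = [detector.observe(r) for r in readings]
```

The first spike is ignored because the next reading is healthy. The alert fires only on the fifth reading, after three breaches in a row.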

Focusing only on statistical drift

Detecting data drift is a huge part of AI observability. But if you’re only looking at statistical measures, you're missing the bigger picture.

The real danger often lies in concept drift, a much sneakier problem where the meaning of the data changes. For example, a new slang term trending on social media could completely flip the sentiment of customer reviews, but the statistical properties of the text might look normal. Properly addressing these common AI implementation challenges is essential for building a resilient system.
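
Because concept drift can hide behind normal-looking input statistics, one practical complement is to track accuracy against fresh ground-truth labels over a rolling window. The sketch below assumes you can collect such labels, for example from human review of a sample. The class name and window size are illustrative.

```python
from collections import deque


class RollingAccuracy:
    """Accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=100):
        self._hits = deque(maxlen=window)  # old results fall off automatically

    def record(self, predicted, actual):
        self._hits.append(predicted == actual)

    def value(self):
        return sum(self._hits) / len(self._hits) if self._hits else None


# Hypothetical sentiment model: inputs look the same, but their meaning flips.
tracker = RollingAccuracy(window=4)
for predicted, actual in [("pos", "pos")] * 4:
    tracker.record(predicted, actual)
before = tracker.value()

for predicted, actual in [("pos", "neg")] * 3:
    tracker.record(predicted, actual)
after = tracker.value()
```

In this toy run accuracy falls from 1.0 to 0.25 even though nothing about the input distribution changed, which is the signature of concept drift.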

Lack of clear ownership

When an AI model in production starts to go sideways, who gets the call? If you can't answer that question instantly, you have an ownership problem.

Is it the data science team that built the model? The MLOps team that deployed it? The product manager whose feature it powers? Without a clear owner, problems fall through the cracks. Assign a single, accountable owner for the end-to-end performance of each production model.

Treating observability as a one-time setup

Finally, one of the most dangerous mindsets is treating AI observability as a project you set up once and then walk away from. Your models live in a dynamic world where data, user behavior, and business goals are in constant flux.

Your observability system has to evolve right along with them.

- When you add new features to a model, you have to add them to your monitoring.

- As business priorities shift, the metrics you use to define success will need to change.

- Every time you retrain a model, you need to establish a brand-new performance baseline.

AI observability isn't a "set it and forget it" task. It’s an ongoing process of tuning, adapting, and continuously improving.

Your next step toward trusted AI

When you boil it all down, what is AI observability really about? It comes down to a single, critical business need: trust.

You cannot trust, manage, or scale what you can't see. Your traditional monitoring tools might tell you your systems are online, but they leave you completely blind to the silent, expensive failures unique to AI. This isn't an optional add-on; it's the foundation for any business that relies on AI.

The real problem is that AI models degrade quietly. Data drifts, user behavior changes, and subtle biases creep in - all while your old dashboards show a wall of green lights. The solution is a dedicated practice that gives you clear, continuous visibility into your model's performance, data integrity, and decision logic.

Start with a simple audit of your most critical production model. Get your team together and ask some hard questions:

- Can we confidently explain what data this model is seeing right now?

- Do we know how it’s performing on key business metrics - not just technical ones?

- How fast can we detect if its predictions start to go off the rails?

If the answers are "no" or "we're not sure," then getting some basic monitoring in place is your immediate priority. A full review of your current capabilities is a great place to start. Our guide on performing an AI readiness assessment can give you a structured way to tackle it.

We at N² labs can help. We specialize in moving AI from promising pilots to production-grade systems that deliver real business results. If you're ready to get serious about building trusted, scalable, and measurable AI, we're here to guide you.

Learn more about how we build production-ready AI.

FAQ

What is the difference between monitoring and AI observability?

Monitoring is about watching for problems you already know can happen. You set up a dashboard to track a specific metric, like model accuracy, and it alerts you when that number drops. It answers, "Is the thing I'm watching still working?"

AI observability is about exploring the unknown. When that monitoring alert goes off, observability provides the context - the logs, traces, and metrics - you need to understand why the problem happened. Monitoring tells you that something is wrong. Observability helps you figure out why.

When should you start investing in AI observability?

The moment your first model goes into production and starts touching users or business decisions. You don't need a massive system on day one, but you need a baseline.

- Early stage: Log every prediction and track one core business KPI.

- Growth stage: Add data drift detection and build your first real dashboards.

- Scale-up stage: A comprehensive strategy is non-negotiable, including explainability and governance.

Don’t wait until something breaks to start thinking about this.

What do AI observability platforms cover?

AI observability platforms typically focus on four key areas:

- Performance monitoring: Tracking metrics like accuracy, precision, recall, and latency.

- Drift detection: Identifying changes in input data (data drift) or the underlying relationships in the data (concept drift).

- Explainability: Providing tools to understand why a model made a specific prediction.

- Bias and fairness: Auditing models for unintended bias against certain user groups.

How does AI observability relate to MLOps?

AI observability is a critical component of a mature MLOps (Machine Learning Operations) practice. MLOps covers the entire lifecycle of a model - from data prep and training to deployment and monitoring. Observability is the "monitoring" part of MLOps, but supercharged. It provides the feedback loop that tells the MLOps team when a model is degrading and needs to be retrained or updated, making the entire lifecycle more reliable and automated.

Can AI observability help with regulatory compliance?

Absolutely. Regulations like the EU AI Act and frameworks from NIST demand transparency, risk management, and human oversight for high-risk AI systems. The excuse of "the model is a black box" no longer works.

AI observability provides the technical backbone to meet these requirements. It creates an auditable trail of your model’s lifecycle, giving you tangible proof of data lineage, decision logging, and performance audits for bias and decay. Without this visibility, demonstrating compliance is a nearly impossible, manual nightmare.